HPC at Harvey Mudd College

Harvey Mudd College offers various High Performance Computing (HPC) resources to faculty and students for research and teaching. For questions about any of these resources, contact the CIS Help Desk at helpdesk@hmc.edu.

The Computer Science department also maintains a list of computing resources for students.

HPC Resources at HMC

- Rivendell cluster: A powerful HPC system built of nodes of varied configurations

- Hyper: The newest GPU workstation for the Math faculty

- Kepler: A GPGPU system (9.2 TFLOPS)

- ACCESS (Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support): An NSF-funded program providing access to a network of supercomputers

- LittleFe: A portable Beowulf mini-cluster for HPC education

[Rivendell cluster]

The Rivendell cluster in the Chemistry department consists of almost a dozen nodes with distinct configurations, added at different times. Detailed information for two of them, Gandalf and Galadriel, is given below. The cluster was built jointly by the late Prof. Bob Cave of Chemistry and CIS, and is currently used by several HMC faculty members. It is configured with WebMO for web-based job submission and with software commonly used in computational chemistry, such as Gaussian, GROMACS, Q-Chem, and Anaconda. The system is currently managed by CIS; for access, contact the CIS Help Desk (helpdesk@hmc.edu) or the ARCS team (arcs-l@g.hmc.edu).

Gandalf

- Server: Dell PowerEdge R730

- Number of Cores: 40 (2 x 20-Core Intel Xeon E5-2698 v4, 2.2GHz, 50 MB SmartCache)

- GPU: 2 x Tesla K80

- Number of CUDA Cores: 9984 (2 x 4992)

- GPGPU Performance: 8.74 TFLOPS (peak single-precision performance) and 2.91 TFLOPS (peak double-precision performance)

- Memory: 256 GB (DDR4 memory running at 2400 MHz Max)

- Storage: OS: 200 GB; Data: 21 TB

- OS: CentOS Linux release 7.9.2009 (Core)

- Physical Location: CS Server Room

- Hostname: gandalf.chem.hmc.edu

- Year Built: 2018

- Applications: WebMO, gaussian16, schrodinger2019-3, pbspro 18.1.3, cfour, orca, q-chem, swig, gromacs, anaconda3
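Since the application list above includes both pbspro and gaussian16, a batch job for Gandalf could in principle be described by a PBS job script. The following is a minimal sketch only: it renders such a script as text using standard PBS directives (`-N`, `-l select`, `-l walltime`) and the `g16` command. The job name, input file name, and resource values are hypothetical, and this page does not say whether direct batch submission is allowed alongside WebMO or how the Gaussian environment is loaded, so confirm both with the CIS Help Desk.

```python
def render_pbs_script(job_name, input_file, ncpus=4, walltime="01:00:00"):
    """Return the text of a PBS job script that runs one Gaussian 16 calculation."""
    # Write the log next to the input, e.g. water_opt.com -> water_opt.log
    output_file = input_file.rsplit(".", 1)[0] + ".log"
    lines = [
        "#!/bin/bash",
        f"#PBS -N {job_name}",
        f"#PBS -l select=1:ncpus={ncpus}",
        f"#PBS -l walltime={walltime}",
        "",
        "# Run from the directory the job was submitted from.",
        'cd "$PBS_O_WORKDIR"',
        "",
        "# Assumption: g16 is already on PATH in the job's environment.",
        f"g16 < {input_file} > {output_file}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job name and input file, for illustration only.
    print(render_pbs_script("water_opt", "water_opt.com"))
```

The rendered text could be saved to a file and passed to `qsub`, provided direct submission is permitted on the cluster.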

Galadriel

- Server: Dell PowerEdge R740

- Number of Cores: 48 (2 x 24-Core Intel Xeon Gold 5220R, 2.2GHz, 50 MB SmartCache)

- Memory: 384 GB

- Storage: OS: 250 GB

- OS: CentOS Linux release 7.9.2009 (Core)

- Physical Location: CS Server Room

- Hostname: galadriel.chem.hmc.edu

- Year Built: 2020

- Applications: home and /opt/local are mounted from Gandalf (see the Gandalf application list above)

[Hyper]

Hyper (hyper.math.hmc.edu) is owned by Prof. Jamie Haddock and Prof. Heather Zinn-Brooks. It is primarily used for teaching and research in the mathematics department.

- Number of Cores: 32 (2 x 16-Core AMD EPYC 7313 Processor, 3GHz, 256 MiB cache)

- GPU: 8 x NVIDIA RTX A4000

- Number of CUDA Cores: 49152 (8 x 6144)

- GPGPU Performance: 157.6 TFLOPS (peak single-precision performance) and 4.79 TFLOPS (peak double-precision performance)

- Memory: 1 TiB (DDR4 memory running at 3200 MT/s)

- Storage: OS: 3.5 TB; Data: 14 TB

- OS: Ubuntu 20.04.6 LTS

- Physical Location: Shanahan building

- Hostname: hyper.math.hmc.edu

- Year Built: 2023

- Applications: JupyterHub, CUDA toolkit, anaconda, python (numpy, pandas, scipy, matplotlib, seaborn, scikit-learn, xgboost, lightgbm, opencv, requests, urllib3, pytorch), Julia, MATLAB
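Because Hyper provides PyTorch together with the CUDA toolkit, a quick way to confirm that a session can actually see the GPUs is to ask PyTorch to list them. The sketch below is a hedged example, not an official procedure: the function name `visible_cuda_gpus` is made up for illustration, and the result depends on the Python environment that is active (it returns an empty list when PyTorch is not installed or no CUDA device is visible).

```python
def visible_cuda_gpus():
    """Return the names of the CUDA devices PyTorch can see, or [] if there are none."""
    try:
        import torch
    except ImportError:
        return []  # PyTorch is not installed in this environment
    if not torch.cuda.is_available():
        return []  # No usable CUDA device or driver
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]


if __name__ == "__main__":
    names = visible_cuda_gpus()
    print(f"{len(names)} CUDA device(s) visible")
    for index, name in enumerate(names):
        print(f"  cuda:{index}  {name}")
```

Running this in a JupyterHub notebook cell or from a terminal session should list one entry per GPU that the session is allowed to use.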

[GPGPU System]

Kepler (kepler.physics.hmc.edu) is a GPGPU system maintained by Prof. Vatche Sahakian in Physics. It has an RTX 2070 Super GPU and hosts a JupyterHub server for teaching and research.

For access, contact Prof. Vatche Sahakian (Vatche_Sahakian@hmc.edu).

- Number of Cores: 8 (2 x Intel Xeon E5606 Quad-Core 2.13GHz, 8MB Cache)

- RAM: 24 GB (Operating at 1333MHz Max)

- GPU: 1 x RTX 2070 Super

- Number of CUDA Cores: 2560

- GPGPU Performance: 9.2 TFLOPS (floating-point performance)

- OS: Linux (Ubuntu 20)

- HDD: 1TB Fixed Drive (3Gb/s, 7.2K RPM, 64MB Cache)

- Physical Location: Chemistry Lab

- Host Name: kepler.physics.hmc.edu

- Year Built: July 2012 (upgraded: 2020)

- Available Software: CUBLAS, CUDA LAPACK libraries from NVIDIA, Mathematica 8, Anaconda3, JupyterHub

[ACCESS]

Harvey Mudd College makes use of ACCESS (Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support), an NSF-funded national cyberinfrastructure project that gives access to a network of supercomputers located at institutions across the United States. CIS has been participating in the Campus Champion program for XSEDE, the predecessor of ACCESS. CIS helps faculty obtain allocations on the computing resources made available through ACCESS and set up their computing environment and workflow. For more information, please email the CIS Help Desk at helpdesk@hmc.edu.

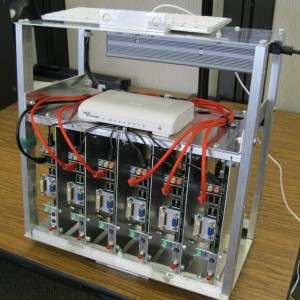

[HPC Education System]

LittleFe (littlefe.cs.hmc.edu) is a portable Beowulf cluster built for HPC education. Harvey Mudd College won a LittleFe unit in September 2012 for the LittleFe Buildout event at SuperComputing 2012 (SC12). LittleFe comes with a pre-configured Linux environment that includes the libraries needed to develop and test parallel applications using shared-memory parallel processing (OpenMP), distributed-memory parallel processing (MPI), and GPGPU parallel processing (CUDA). It can be used for parallel processing and computer architecture courses.

To use the LittleFe unit for your class, contact the CIS Help Desk (helpdesk@hmc.edu).

- Number of Cores: 12 (6 x Dual-Core Intel Atom ION2 at 1.5 GHz)

- RAM: 2GB DDR2 800 RAM per node

- OS: Linux (BCCD 3.1.1)

- HDD: 160 GB Fixed Drive (7.2K RPM, 2.5” SATA HDD)

- Physical Location: CS Server Room (Portable)

- Host Name: littlefe.cs.hmc.edu

- Month and Year Built: August 2012

- Manufacturer: LittleFe Project

- Software Available: MPI, OpenMP, CUDA, System Benchmarks (GalaxSee, Life, HPL-Benchmark, Parameter Space)
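The parallel programming models that LittleFe supports share a common pattern: split the work across workers, let each compute a partial result, then combine the results in a reduction. The sketch below illustrates that pattern by estimating pi with the midpoint rule. It is a teaching stand-in written in plain Python, not an MPI or OpenMP program: the `rank` and `size` parameters mimic the naming typical of MPI programs, and threads are used only to keep the example self-contained. The function names are hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def partial_pi(rank, size, n):
    """Midpoint-rule contribution of one worker to the integral of 4/(1+x^2) on [0, 1]."""
    h = 1.0 / n
    total = 0.0
    # Cyclic distribution: worker `rank` handles intervals rank, rank+size, rank+2*size, ...
    for i in range(rank, n, size):
        x = (i + 0.5) * h
        total += 4.0 / (1.0 + x * x)
    return total * h


def estimate_pi(n=100_000, workers=4):
    """Split n intervals across `workers` and sum the partial results (the reduction step)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(partial_pi, range(workers), [workers] * workers, [n] * workers)
        return sum(parts)


if __name__ == "__main__":
    estimate = estimate_pi()
    print(f"pi ~ {estimate:.10f} (absolute error {abs(estimate - math.pi):.2e})")
```

In an MPI version of the same idea, each process would call the equivalent of `partial_pi` with its own rank, and the final sum would be a collective reduction across nodes.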